Mark Zuckerberg on Twitter fact-checking Trump: Private companies shouldn't be the arbiter of truth

Watch Dana Perino's interview with Facebook's Mark Zuckerberg on Thursday, May 28 on 'The Daily Briefing.'


When it comes to violating Facebook's guidelines, it is often anyone's guess what will fly and what will fall.

As President Trump's rhetoric against social media companies and their censorship gains momentum following Twitter's decision to flag some of his posts as containing false information or glorifying violence, troubling questions still surround Facebook's inconsistent censorship policies.

"Facebook has a massive set of content guidelines that are so broad and extensive they could ban almost any content," Dan Gainor, vice president of business and culture at the Media Research Center, told Fox News. "In addition, Facebook just announced a new Oversight Board that will handle content appeals. That board is overwhelmingly international and lacks American support for First Amendment ideals."

Nonetheless, Facebook CEO Mark Zuckerberg has sought to distance his platform from the President's Twitter feud, insisting in an interview on Fox News this week that his company has "a different policy than Twitter," and that it is "stronger on free expression" than other tech giants.

Earlier this week, Trump announced he would act, by executive order, to weaken a law that shields Internet companies and social media giants such as Twitter and Facebook from legal liability, and to cut federal funding for tech companies that engage in censorship and political conduct.

But for foreign governments and interest groups, there may be more wiggle room, analysts contend.

For example, Iran-backed groups have a long track record of using the platform for propaganda and even lethal purposes, from which both the shadowy outfits behind them and Facebook itself have seemingly profited.

Last year, Facebook was filled with "sponsored" content featuring signs reading "Death to America."

"Death to America" sponsored content on Facebook (Steven Nabil screenshot)

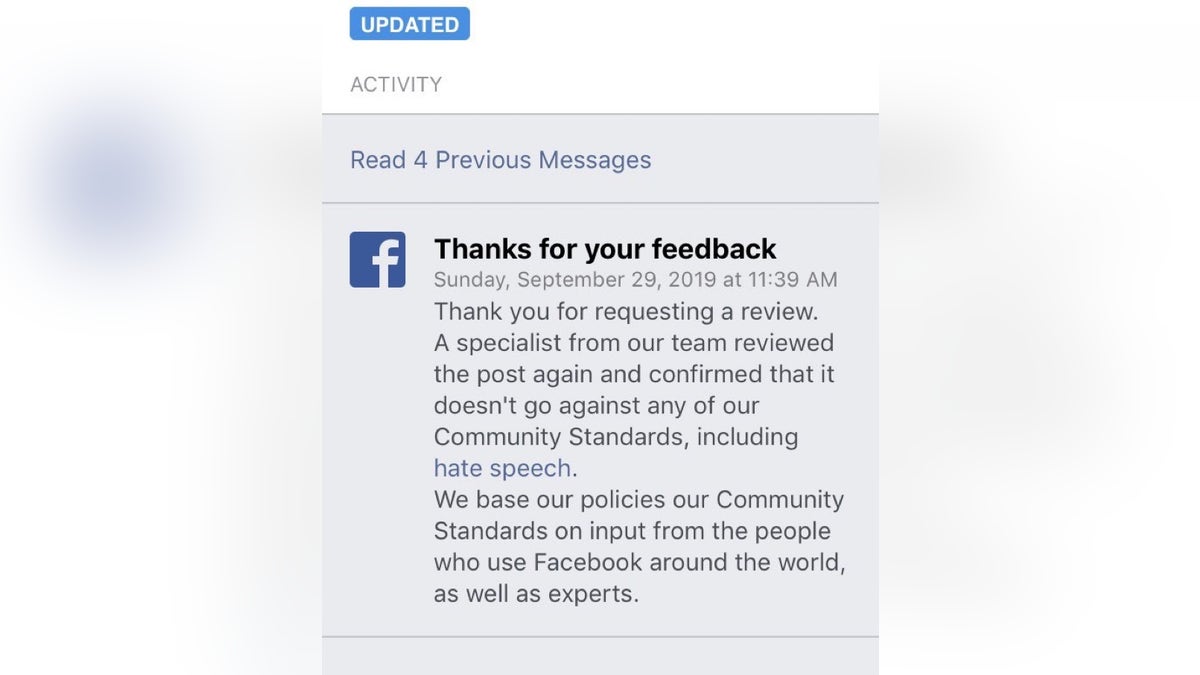

Several Iraqis who reported the advertising as a violation said they instead received a "thank you for requesting a review" message.

"A specialist from our team reviewed the post again and confirmed that it doesn't go against our Community Standards, including hate speech," the response read. "We base our policies our Community Standards on input from the people who use Facebook around the world, as well as experts."

(Steven Nabil screenshot)

Gainor pointed to what he sees as hypocrisy.

"The problem is Facebook allowed those ads but banned pro-life ads in Ireland during the campaign whether to allow abortion or not. Facebook isn't consistently pro-freedom at all," he said. "And that's what's scary."


Weeks after those ads started circulating, violent protests broke out in Baghdad, and more content was pushed urging retaliation against the protesters who had taken to the streets of Baghdad and southern Iraq to demand greater freedoms and an end to Iranian meddling. Ultimately, hundreds were killed when pro-Iran militias opened fire on them.

U.S.-based Iraqi journalist Steven Nabil found himself targeted by militia groups, and when he tried to speak out about the brewing unrest in his homeland, his own page was shut down for 30 days with no way to appeal the ban to Facebook. According to Nabil, it was in September 2019 that slain Iranian General Qassem Soleimani ordered a renewed social media operation using Iran-backed forces based in Baghdad, in neighboring Iraq.

Amid the protests that erupted throughout Iraq in the later months of last year demanding an end to Iran's influence, scores of fake pages flooded Arabic-language feeds, branding journalists who covered the unrest as "agents of the CIA" and claiming they were behind it, thus endangering lives.

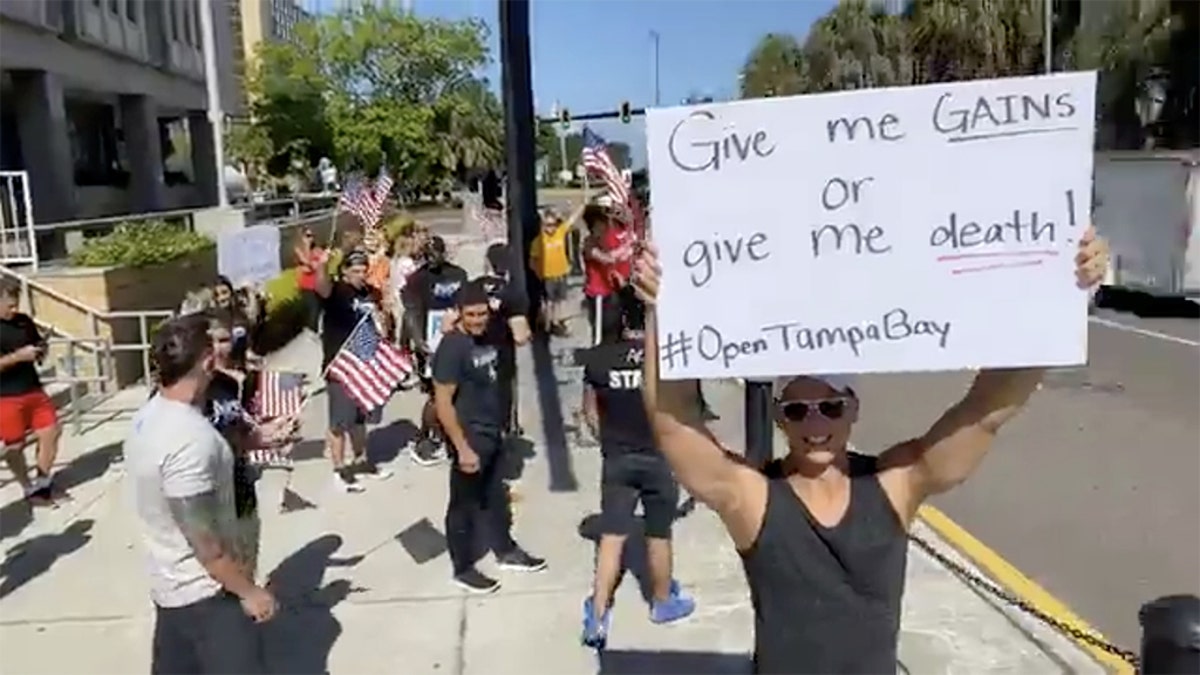

"That puts us in a lot of danger, and many of these pages still run," Nabil said. "And it's especially ironic that Facebook is OK with 'Death to America' but wants to censor Americans trying to get back to work."

People protest for re-opening the economy at a faster rate outside the Old Pinellas County Courthouse, amid the coronavirus crisis, in Clearwater, Florida, U.S. May 11, 2020 in this still image taken from a social media video. (JOZEF GHERMAN/ FACEBOOK/ OPEN TAMPA BAY/via REUTERS)

Indeed, some Facebook critics have described the Silicon Valley giant's handling of protests as hypocritical, if not utterly confusing.

In late April, Facebook took down event pages for rallies organized against the strict stay-at-home orders imposed during the coronavirus pandemic, and later said it had done so only in states where strict social distancing guidelines were in place.


Facebook's selective response to content is symptomatic of a deeper rift within its censorship framework. So how does the flagging and content removal process work?

"Facebook states that it is currently working to reduce the spread of fake news with machine learning that 'predicts what stories may be false' and by reducing the distribution of content 'rated as false' by independent third-party fact-checkers. There are several problems with this," observed Kristen Ruby, social media consultant and president of the New York-based Ruby Media Group. "Every human being has a level of internal bias. So whatever the fact-checkers say is false is flagged as false. Meaning, they are engaging in fact-checking, but everyone's views of facts are distorted by their own personal opinion."

In her view, this is why it is critical for Facebook and big tech companies to decide if they are truly publishers or if they are neutral parties, because "you can't be both."

Yet trial attorney Kelly Hyman argued that Facebook is actually becoming safer for its roughly 2.6 billion users by using artificial intelligence (AI), community feedback and content review teams to monitor violations, including fraud and deception, coordinating harm and publicizing crime, bullying and harassment, hate speech, and cruel and insensitive content.

"The crackdown ranges from removing the content to disabling the account, to covering the content with a warning, and cases that pose harm to public safety, law enforcement is involved," she said. "But, the company may need to step up their efforts."

In this Oct. 17, 2019, file photo Facebook CEO Mark Zuckerberg speaks at Georgetown University in Washington. (AP Photo/Nick Wass)

While Facebook does label posts deemed misleading, it exempts posts by politicians from review, an exemption that some lawmakers say perpetuates the spread of falsehoods and makes an already dizzying policy even harder to follow.

"Where it has gone wrong in recent years is a matter of perspective – some policymakers and thought leaders believe that it should not censor any content while others believe it should censor far more than it currently does," said Heather Heldman, a former adviser to the U.S. State Department and managing partner at the Luminae Group.

Unlike Twitter, Facebook outsources its fact-checking to media partners and says it takes no stance itself.

And as for the future of Facebook amid Trump's legislative push, some say that regardless of how it plays out, it is time for a reboot.

"Why should big tech platforms have an unfair business advantage and be immune from lawsuits when other businesses are not afforded this advantage? This law has not been changed in 24 years. It is important that we review this policy to see if it still makes sense for the 2020 digital economy and ecosystem we live in and beyond," Ruby added. "You can't say on the one hand that you are not responsible for the content that appears on your platform, but on the other say, here is a list of 50 reasons why we will delete your content."

Facebook did not respond to a request for comment.